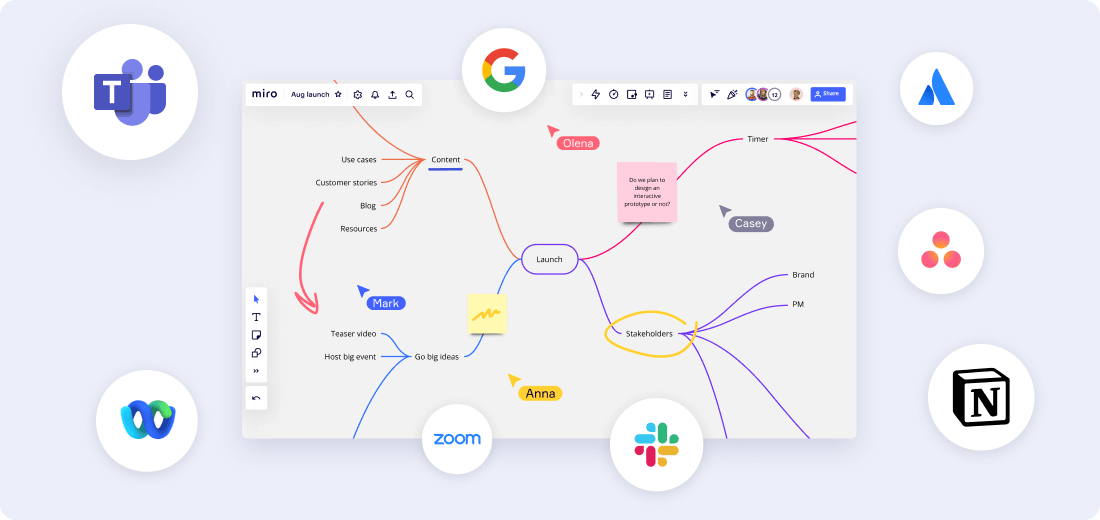

More than 130 apps and integrations

Connect your tools and close your tabs with the apps you already use and love.

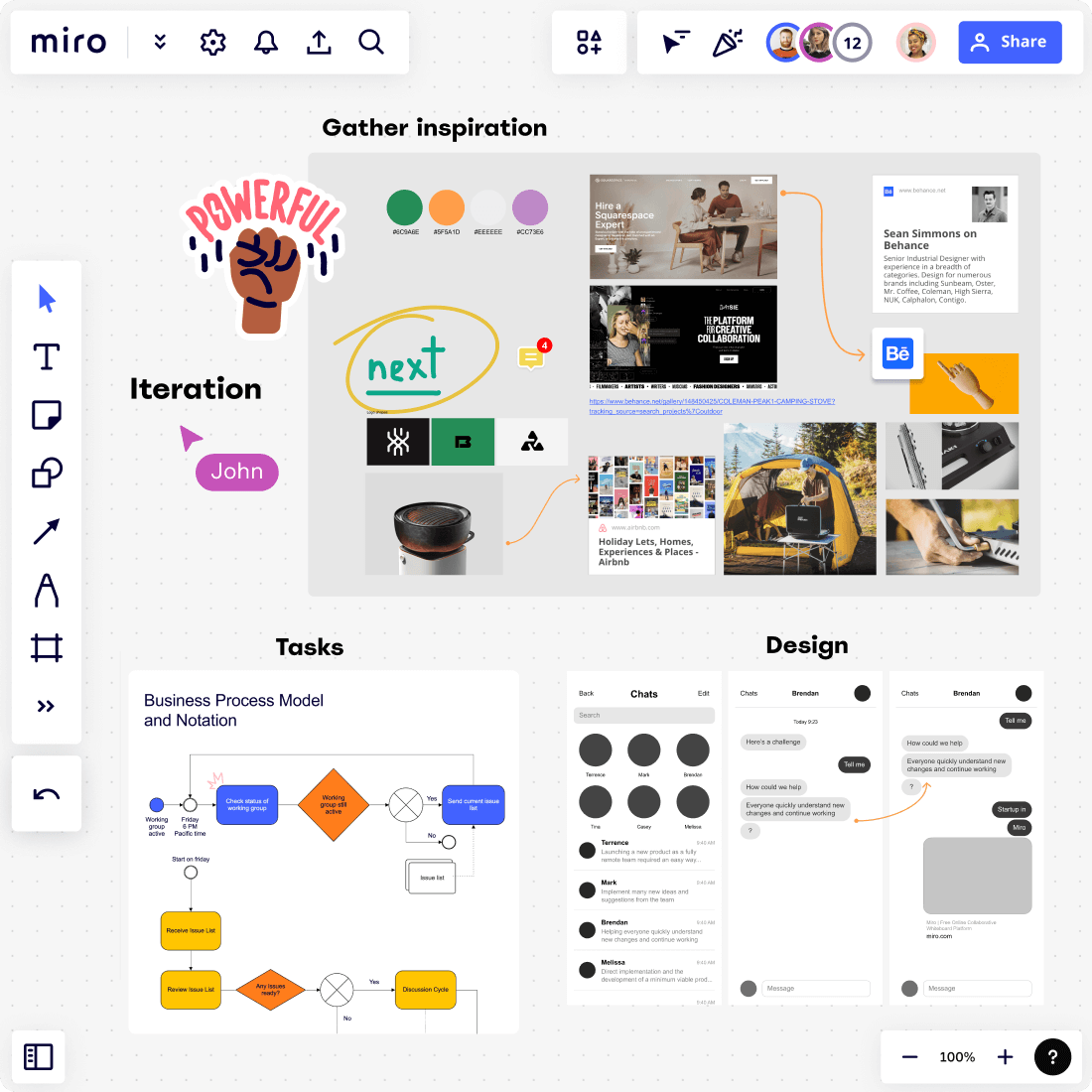

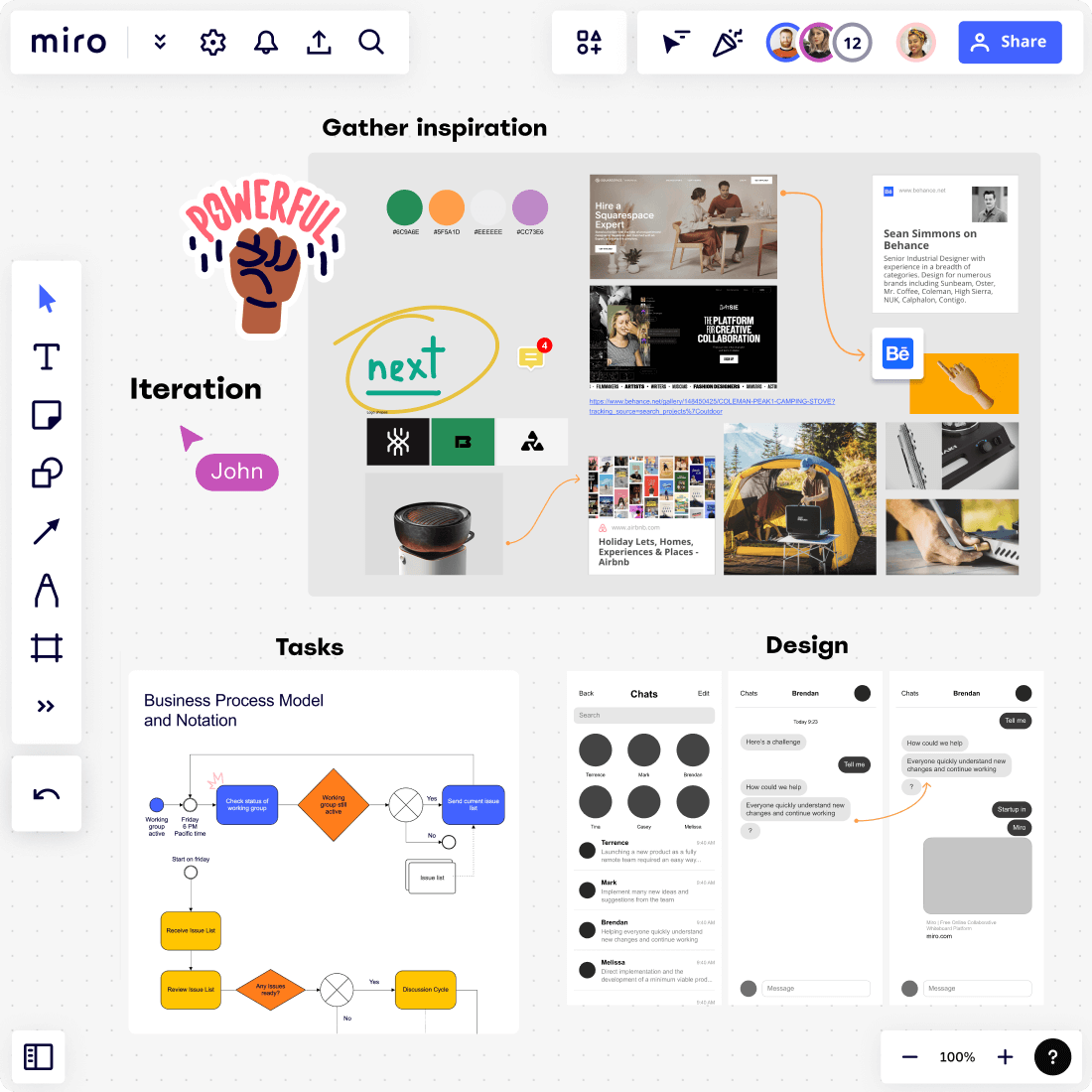

From brainstorming with your cross-functional team to gathering feedback across one iteration after another, create product experiences that feel magical.

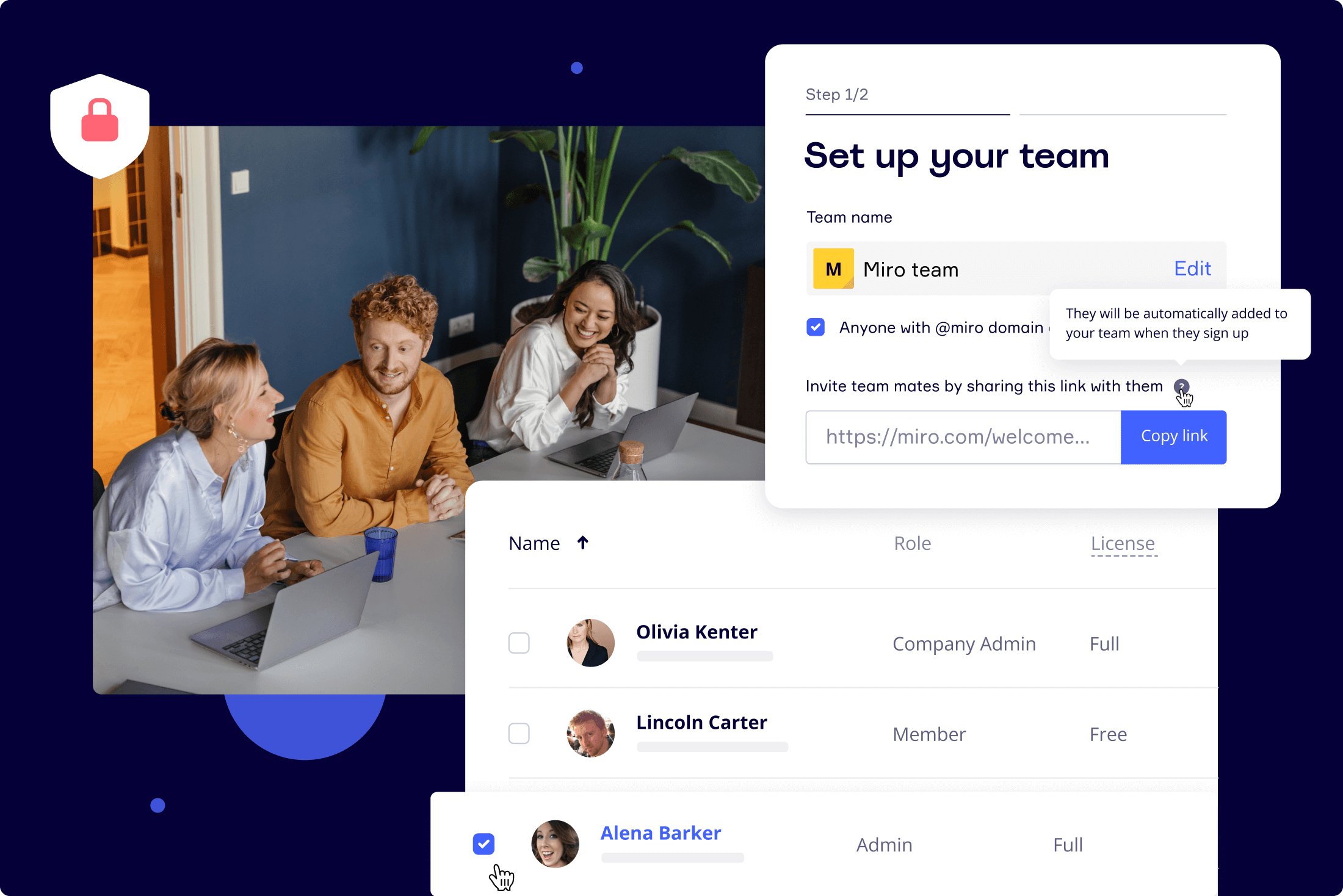

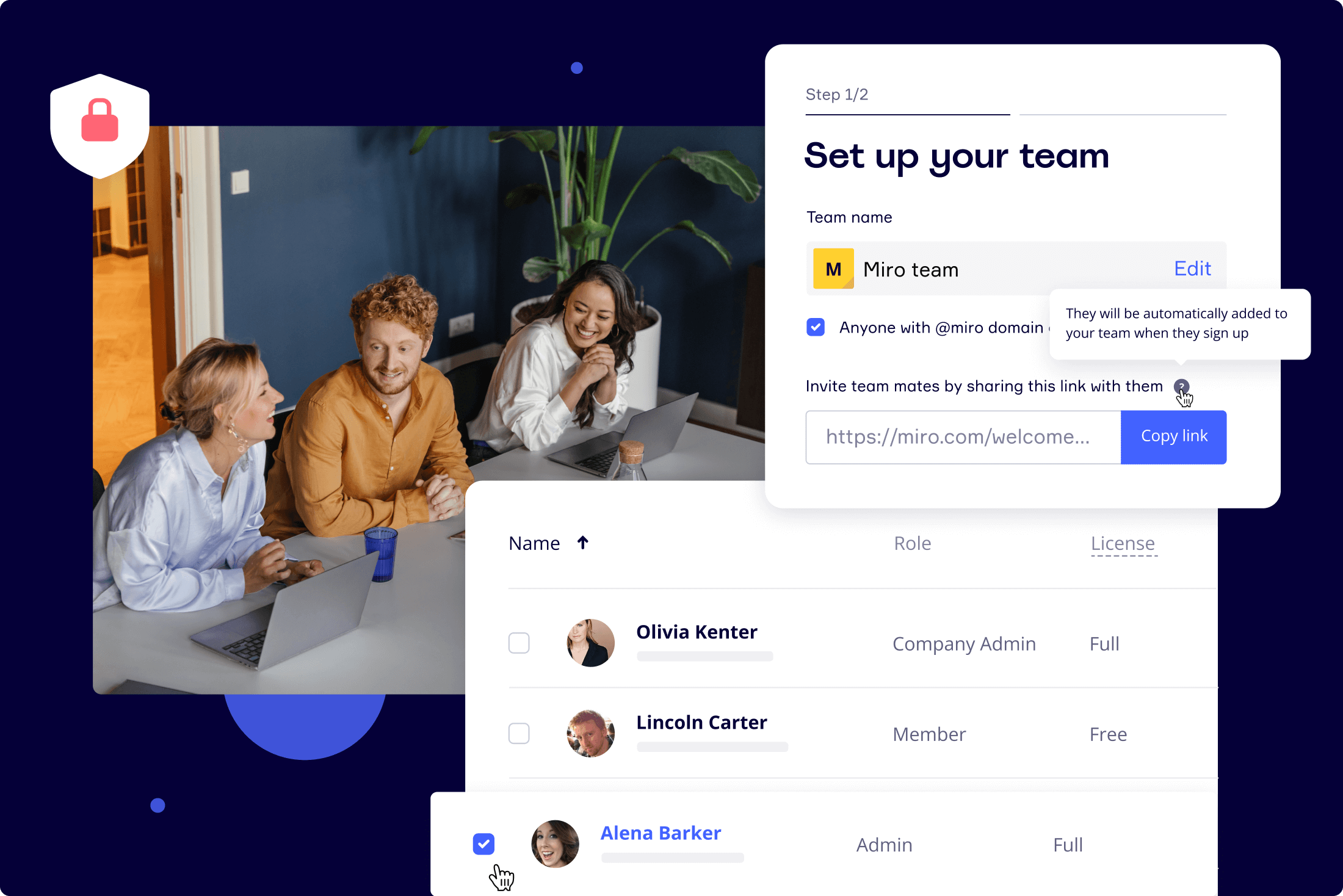

Get aligned with your team in minutes

70 million users worldwide trust Miro

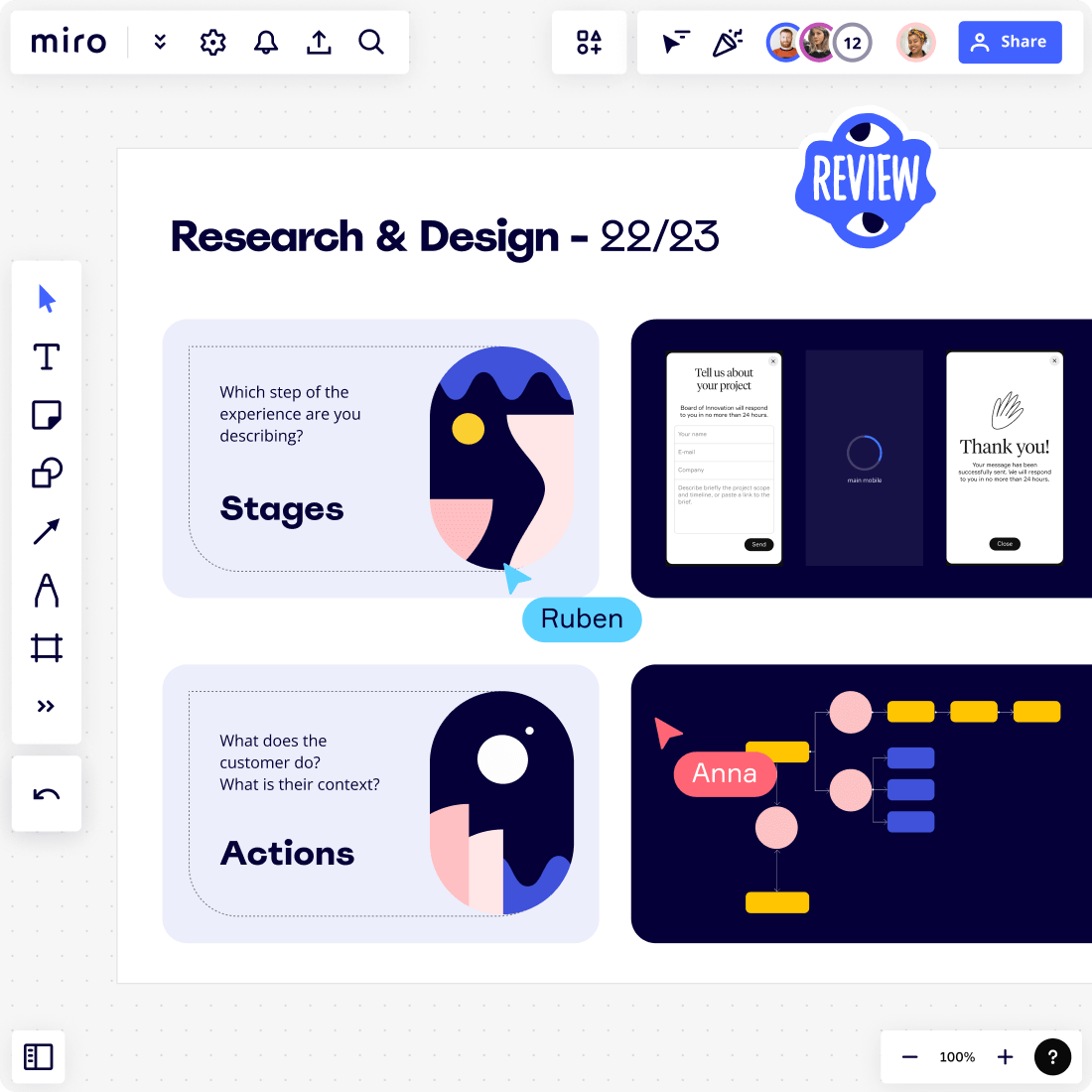

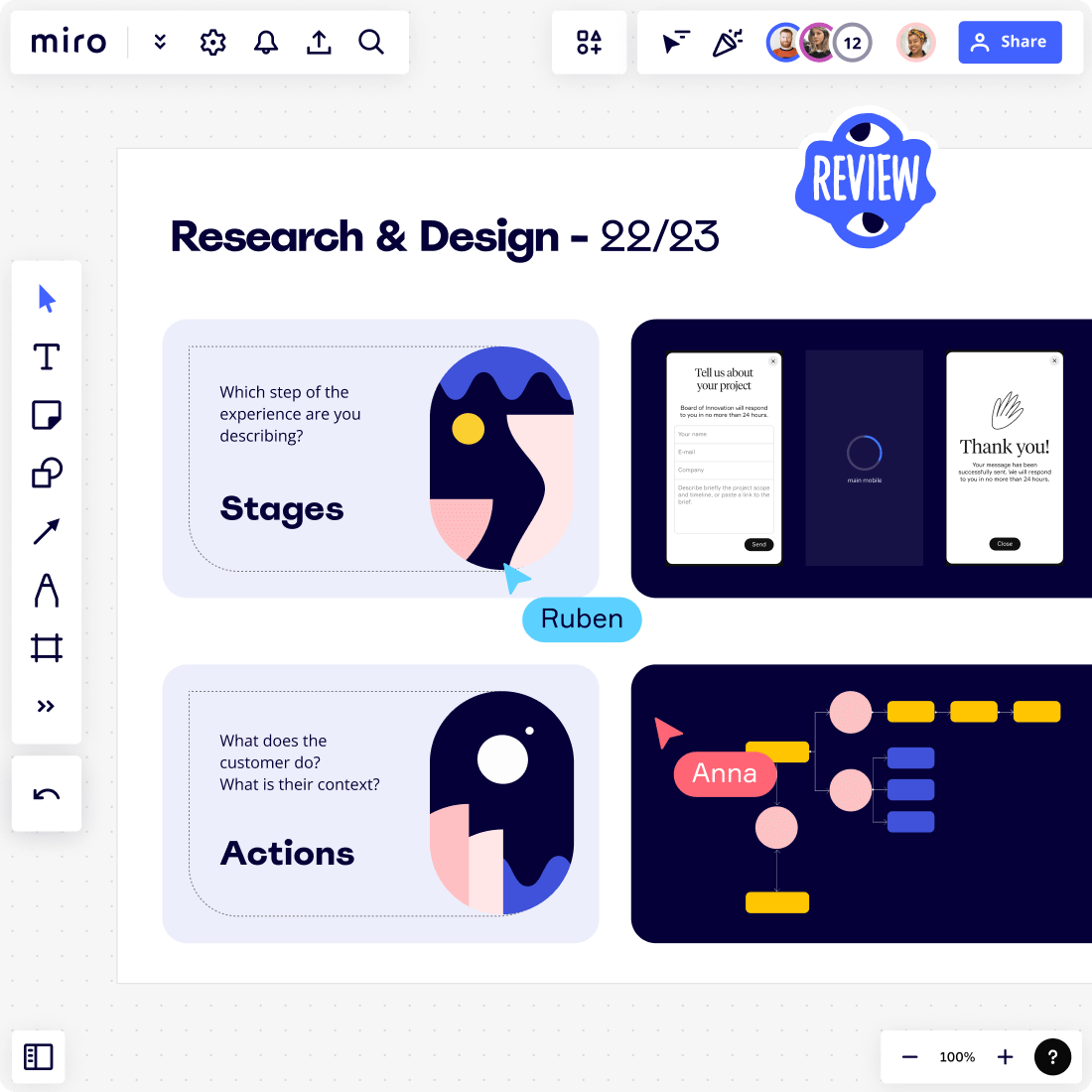

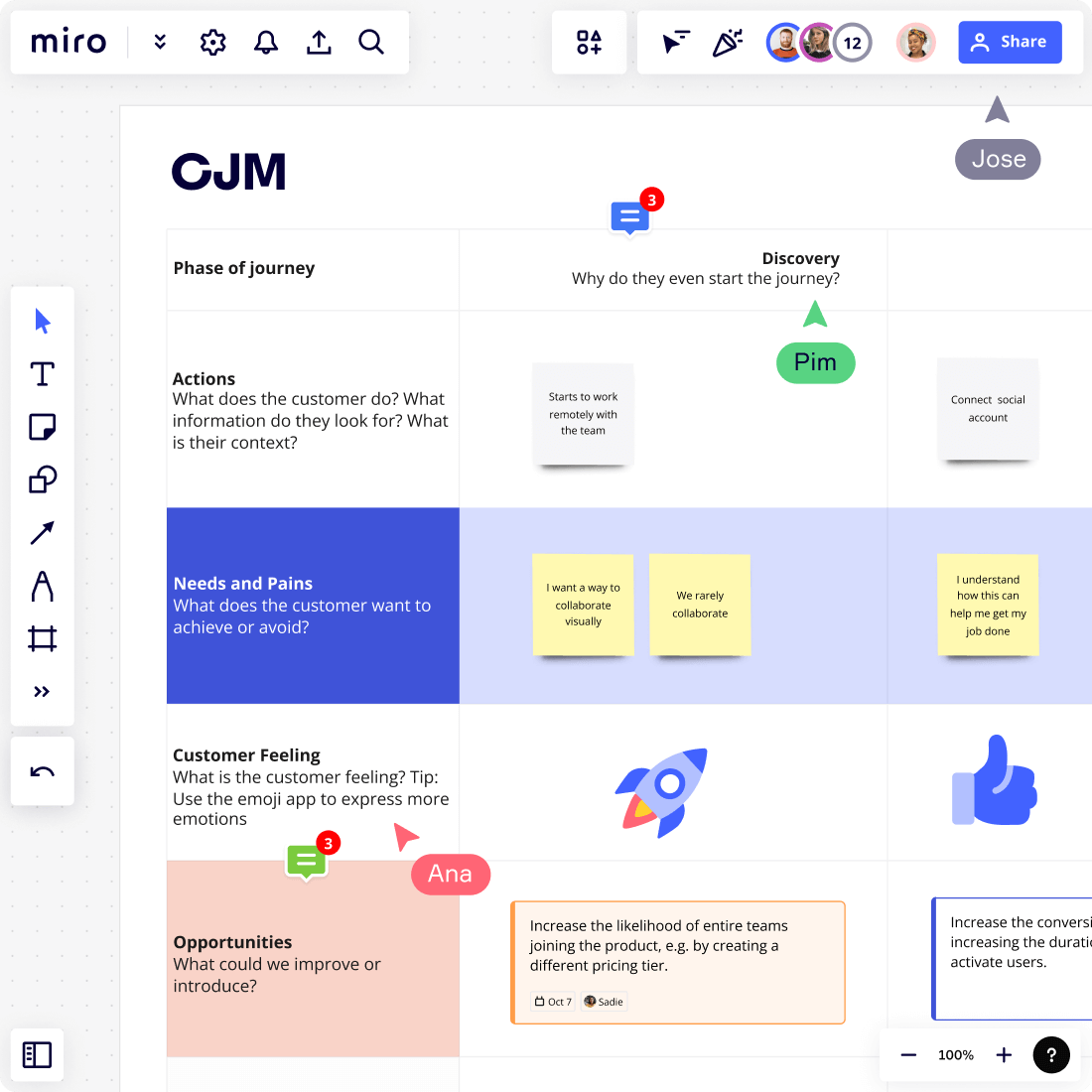

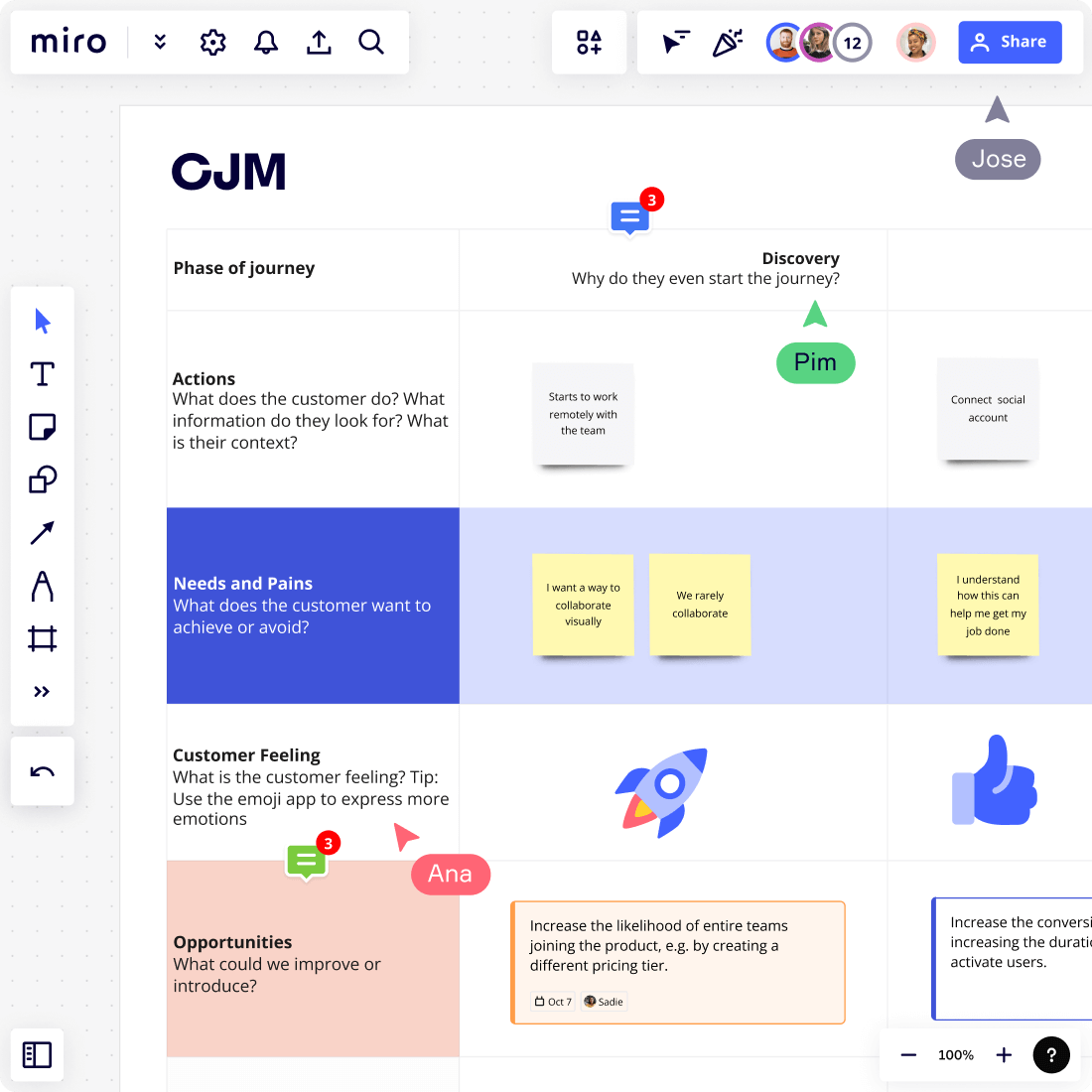

Present your findings visually and make them easy to understand by centralizing and organizing your research data on a single Miro board.

Bring the customer's perspective into technical discussions. On Miro's infinite canvas, you can lead conversations about user needs, brainstorm solutions, and improve the consistency of the experience.

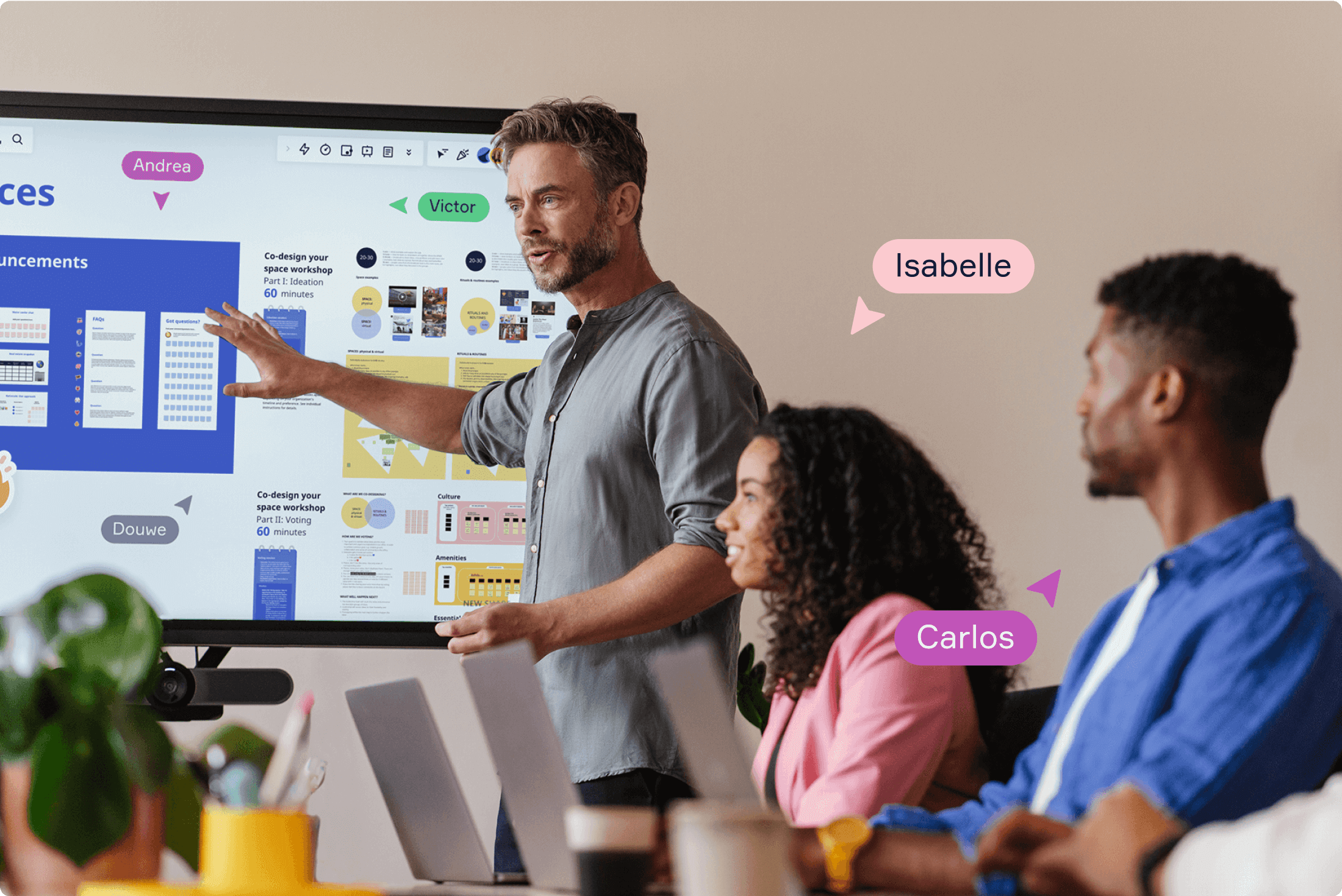

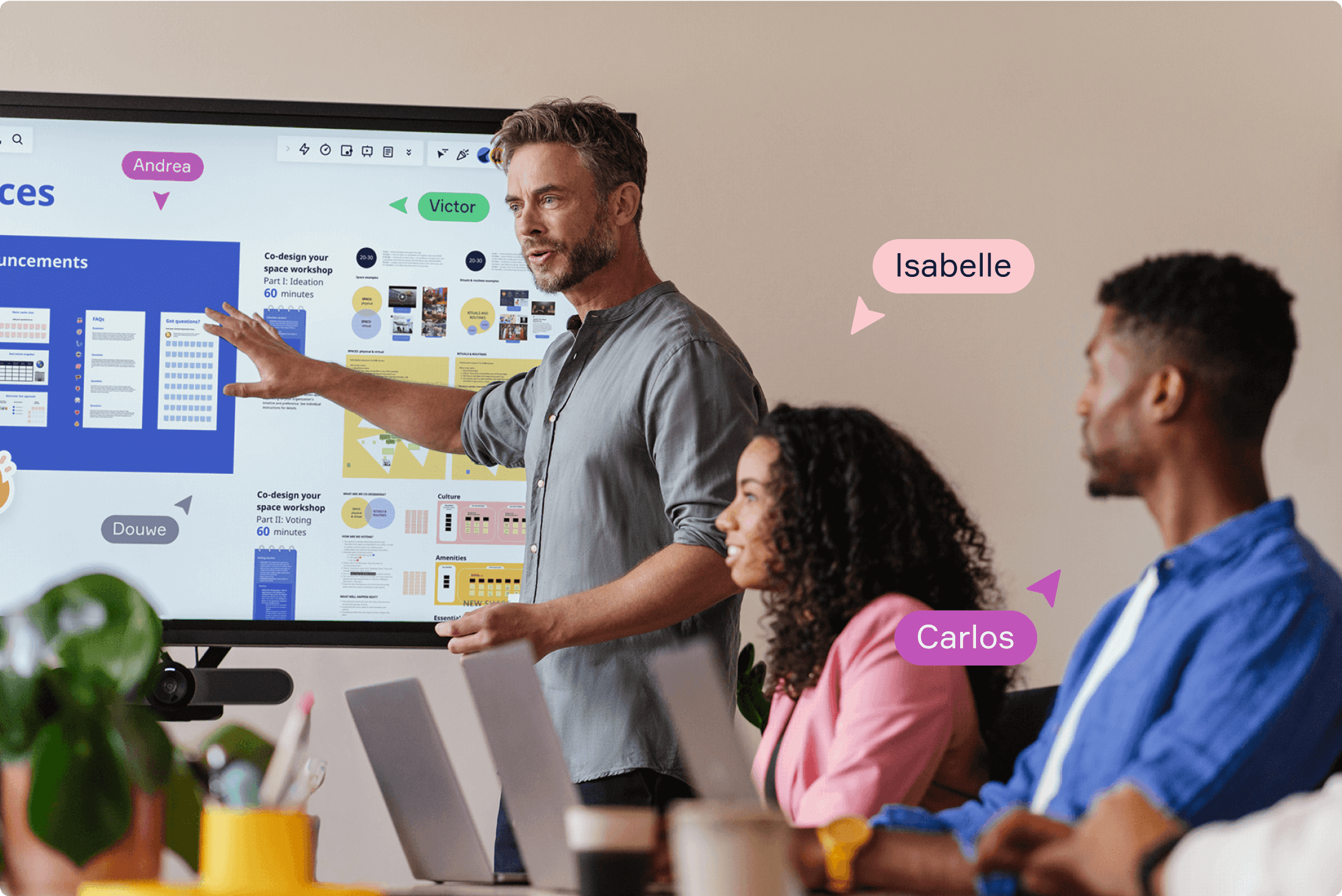

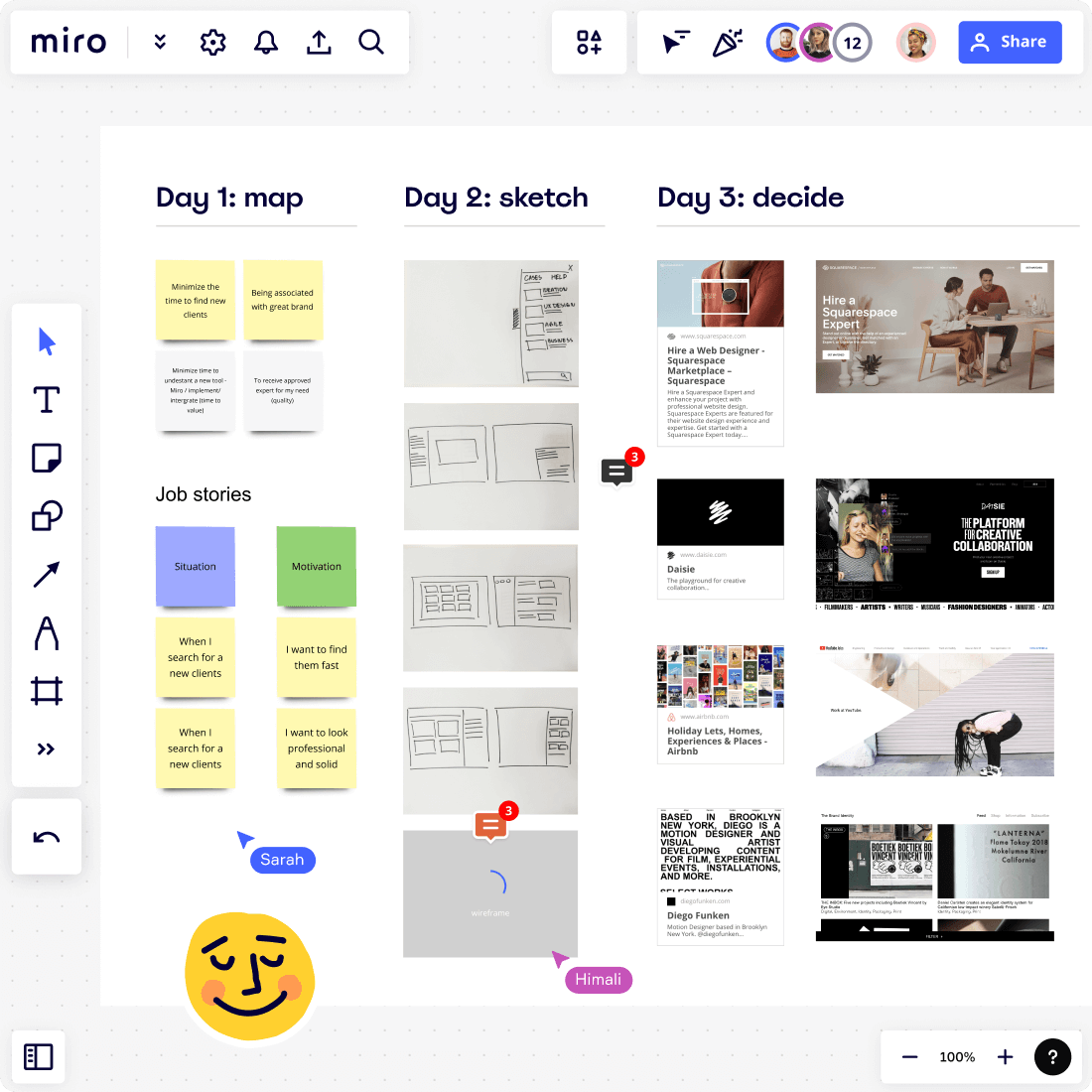

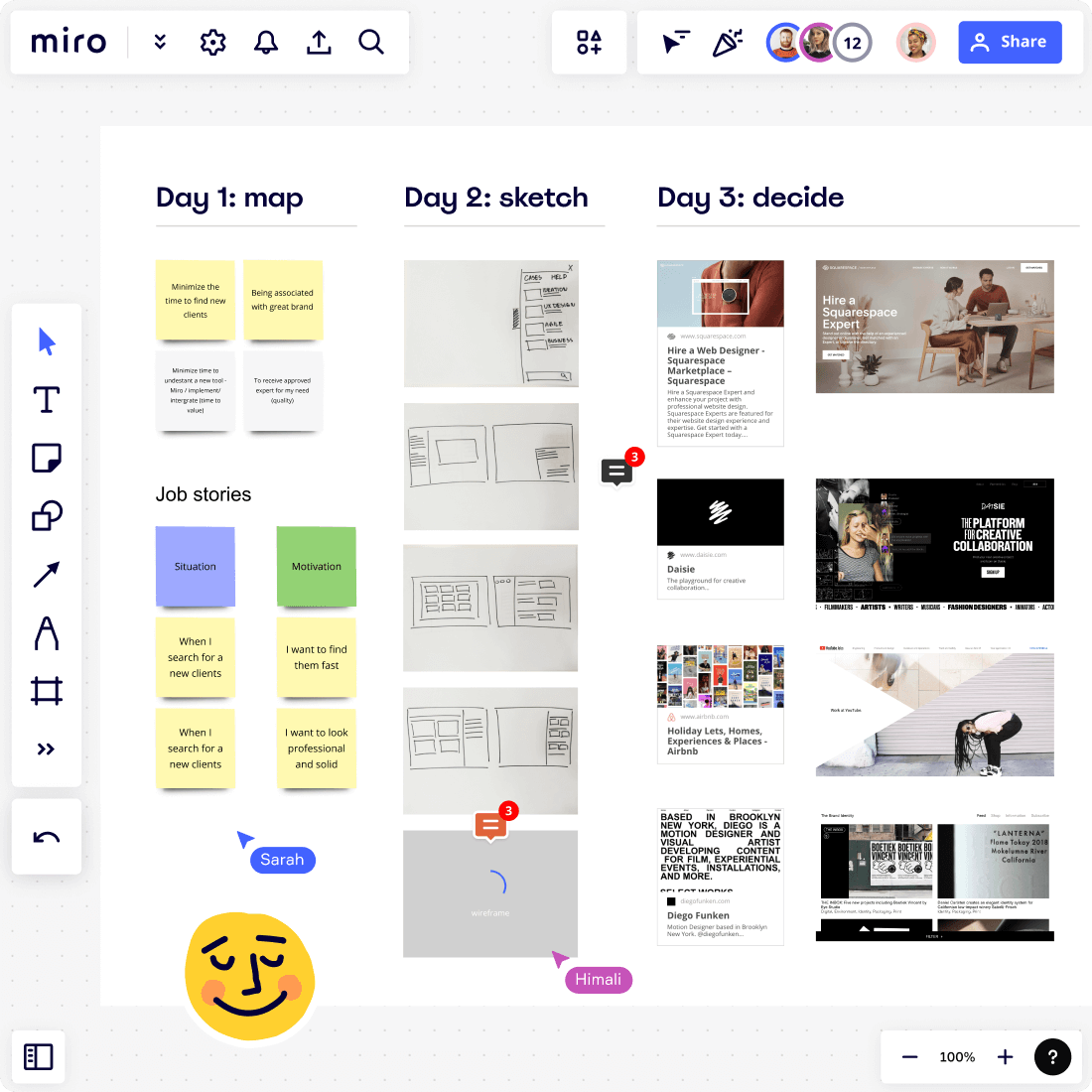

Don't let distance come between your team. Miro's infinite canvas makes design efficient and engaging for everyone, whether they are sitting across from you or on the other side of a screen.

Foster workflows that encourage new ideas and perspectives. Inviting your teammates to a board to brainstorm and give feedback leads to better ideas, a stronger team culture, and greater influence across the organization.

Teams of all sizes are innovating and executing faster than ever. With enterprise-grade security, 99% of Fortune 100 companies trust Miro.

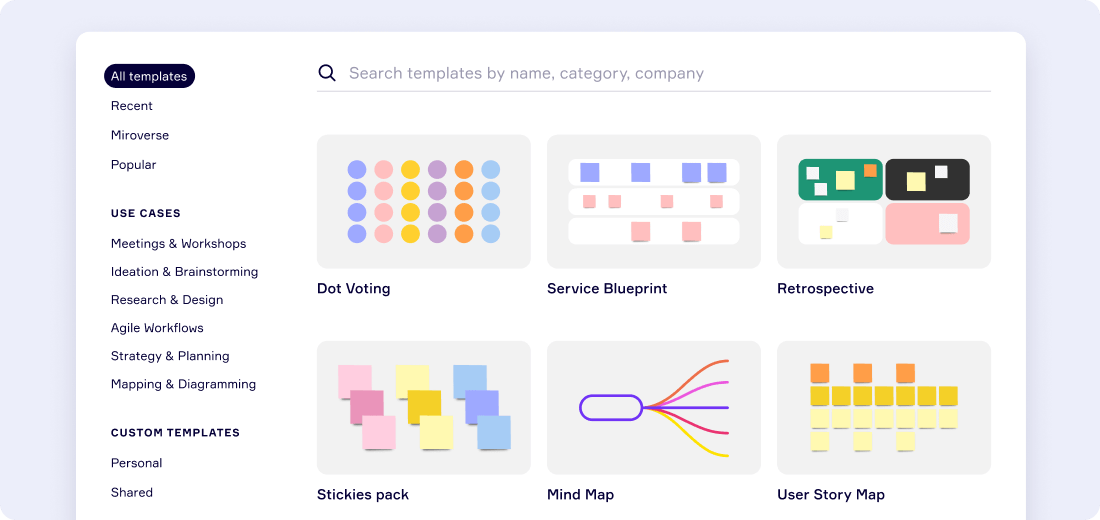

Save time with ready-made frameworks and proven workflows.

Join thousands of teams using Miro to do their best work.
